why we should do that?

in web scraping sometime you found a case that the data in website you

scraped is updated every week ,every day ,or even every hours

is annoying if you must run the program again and again

why don’t we do it automatically 24/7?

what tools that we need?

1.vps

vps is virtual private server ,as like the name vps make we can run computer

virtually ,run the program ,save file ,edit file or deleting file

it is main core tools to do background process task

2.python

there is lot programming language but in this case i’m using python because

its simply and have package that we need to do web scraping

3.beautifulsoup

beautifulSoup is python library that make easy to scrap website ,its have html parser and that can searching , and modifying the parse tree.

4.request

request also python library, http request return a response object with all response data like content ,status ,encoding etc

5.schedule

getting started

first we should install package that we need

you can simply install python from the official website in here

and install all package we need using command :

pip install BeautifulSoup requests

if you already installed you can import the package

from bs4 import BeautifulSoupimport requests

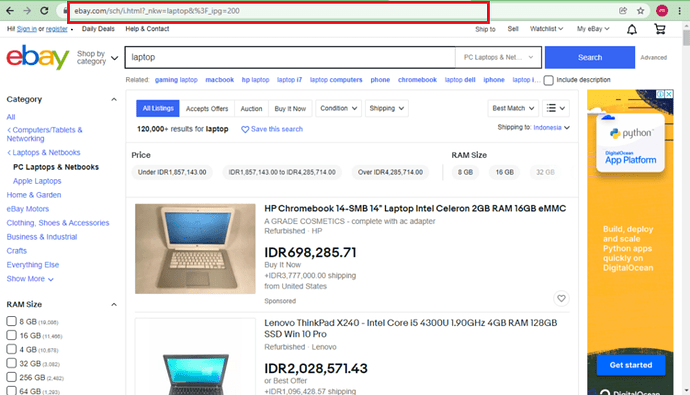

then created variable called url the website we want to scrape

url=‘Shop by Category | eBay’

you can look in url there is paramater _nkw and 3f_jpg

_nkw stands for value the search and 3f_jpg is for the data per page

after that we can create variable called params

params={“_nkw”:“laptop”,“3F_ipg”:“50”

}

Request HTTP

Next, we are going to get the URL and the page and parse them to html.parser:

req=requests.get(url,params=params)soup=BeautifulSoup(req.text,‘html.parser’)

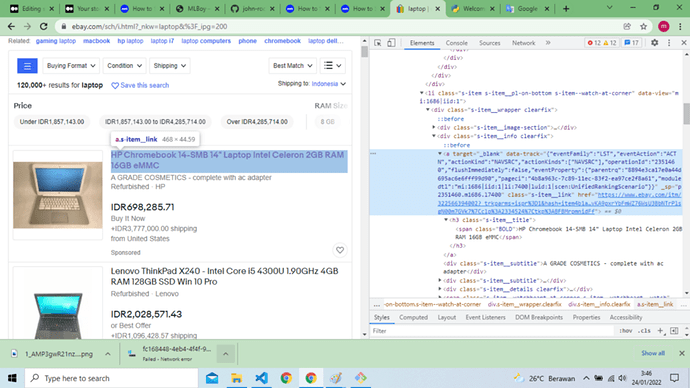

then we find all every product

products=soup.find_all(‘li’,’s-item s-item__pl-on-bottom s-item — watch-at-corner’)

because we using find_all method we get all “li” element in array

then we should looping all element into variable in product variable

for product in products:

and get child element product

for product in products:

link =product.find(‘a’,‘s-item__link’)[‘href’]

print(link)

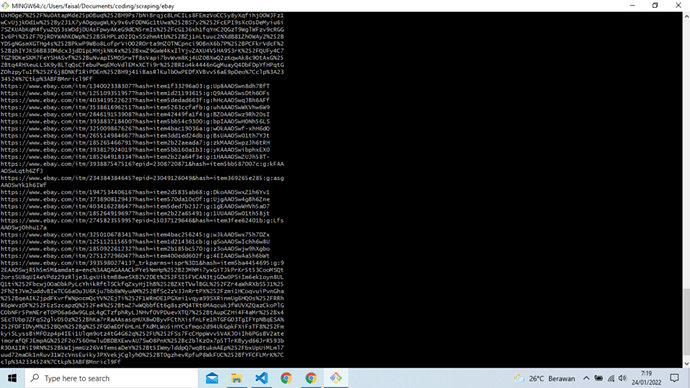

and that we got

and we will scrape every details product with the same ways

here the code:

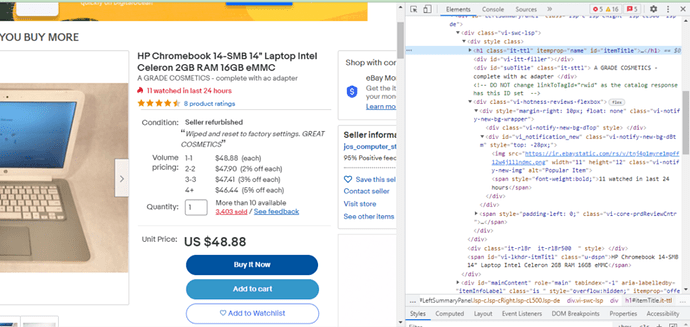

soup=BeautifulSoup(req.text,'html.parser')

title_product=soup.find('h1',{'id':'itemTitle'}).find(text=True, recursive=False)

try:

total_sold=soup.find('div',{'id':'why2buy'}).find('div','w2b-cnt w2b-2 w2b-red').find('span','w2b-head').text+' sold'

except AttributeError:

total_sold='not found'

condition=soup.find('div',{'id':'vi-itm-cond'}).text

try:

rating=soup.find('span','reviews-star-rating')['title'][0:3]

except (TypeError,AttributeError):

rating="rating not found"

price=soup.find('span',{'itemprop':'price'}).text

image_link=soup.find('img',{'id':'icImg'})['src']

data={

"title_item": title_product.encode('utf-8'),

"total_sold":total_sold,

"condition":condition,

"rating":rating,

'price':price,

'image_link':image_link

}

print(data)

and lets wrapping the code to the function and we exported data to json and the we naming the file by time created

and now we should install scheduler python using command :

pip install schedule

there is many option in schedule you can run the function every day/week/hour even every minutes ,so you can check in schedule documentation here

so we can import schedule and run the function ,in this case we run every hour , so we can add the code:

schedule.every(1).hours.do(get_product)while True:

schedule.run_pending()

time.sleep(1)

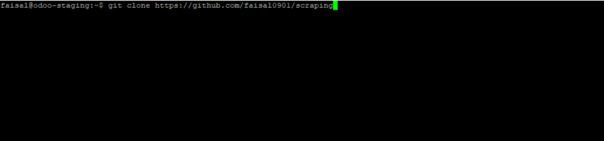

and now we deploy the code to vps ,there are several ways to deploy the code ,you can deploy manually using winscp or clone it from github

in this case clone it from github

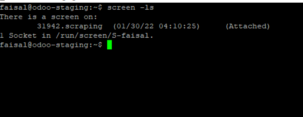

in this case we using ubuntu vps ,and we can use the screen command

screen -S scraping

and we have screen called scraping and we can go to the screen using command

screen -r scraping

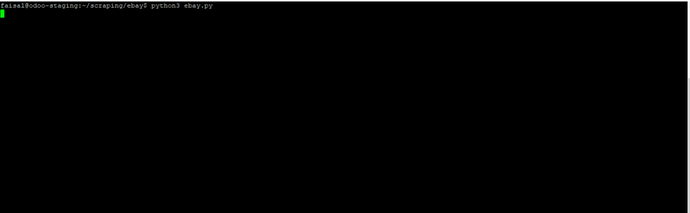

and run the code

and we can exit the screen by holding ctrl A+D

then you can leave the vps and do something else for few hours

after a few hours you can check the screen again by using command

screen -r scraping

and now you can see the program is still running

and the data created every hours in json format