A home is a necessity for everyone, yet choosing the right one is often confusing. Many variables go into the decision, such as the area, the number of bedrooms and bathrooms, the surrounding environment, and of course the price.

If you are unsure how to choose, you can find a suitable house at a relatively low price in two simple steps:

- Scrape all the search results from one of the real estate websites in your country and build a database from the data.

- Use the collected data to perform some analysis to get suitable properties and try to find undervalued properties.

In this article, I will focus on how to scrape a real estate website in Python. The website I am using is the real estate portal Trulia, a registered trademark of Zillow, Inc. It has a very large number of real estate listings to scrape. You can use a different website according to your needs, and you should be able to adapt the code here fairly easily.

Before we begin with the code, here is a summary of the scraping process. I will use the results page from a simple search on the Trulia website. The search lets you specify parameters (such as zone, price filters, and number of rooms), but I will leave them unset. To keep the task short, I will only search for real estate listings in New York.

We then need to send a request to the website and get its response. The result is HTML, from which we extract the elements we want for our final table. After deciding what to take from each property in the search results, we need a for loop to open each results page and perform the scraping.

Now, let's move on to the code.

Import the Modules

As in most projects, we first import the modules we need. I use BeautifulSoup to parse the HTML and extract data from it, requests to send the request to the website and receive its response, and pandas to turn the scraped data into a DataFrame. The full list of imports is as follows.

from bs4 import BeautifulSoup

import requests

import pandas as pd

import urllib.parse

HTTP Request

The website I will scrape is Trulia. I typed New York into the search box and use the URL of the results page as the base URL for scraping. Each page shows 40 properties, and there are 334 pages in total.

website = 'https://www.trulia.com/NY/New_York/'
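The 334 result pages can be addressed one by one starting from this base URL. Below is a minimal sketch of how the list of page URLs might be built with urllib.parse. The 'n_p/' suffix for page n and the helper name build_page_urls are assumptions for illustration, so check the address bar on the second results page and adjust the pattern if it differs.

```python
from urllib.parse import urljoin

BASE_URL = 'https://www.trulia.com/NY/New_York/'

def build_page_urls(base_url, n_pages):
    """Return the URLs of result pages 1..n_pages."""
    # Assumption: page 1 is the base URL itself and page n (n >= 2)
    # appends an 'n_p/' path segment. Verify this against the real site.
    urls = [base_url]
    for n in range(2, n_pages + 1):
        urls.append(urljoin(base_url, f'{n}_p/'))
    return urls

# Build the first three page URLs as a quick check.
page_urls = build_page_urls(BASE_URL, 3)
```

Because the base URL ends with a slash, urljoin appends the suffix instead of replacing the last path segment.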

Some websites automatically block any kind of scraping, and that’s why I’ll define a header to pass along the get command, which will basically make our queries to the website look like they are coming from an actual browser. When we run the program, I’ll have a sleep command between pages, so we can mimic a “more human” behavior and don’t overload the site with several requests per second. You will get blocked if you scrape too aggressively, so it’s a nice policy to be polite while scraping.

headers = ({'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36 Edg/96.0.1054.62'})

Then I send the request to the website like this.

response = requests.get(website, headers=headers)

If you print the response, it will show <Response [200]>. This means the request succeeded: status code 200 stands for OK.
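The status check and the pause between pages can be combined in one loop. The following is only a sketch under some assumptions: the function name fetch_pages, the delay range, and the decision to stop at the first non-200 status are illustrative choices of mine. The get argument is meant to be requests.get, passed in so the loop can also be tried with a stand-in function.

```python
import random
import time

def fetch_pages(urls, get, headers, min_delay=2.0, max_delay=5.0):
    """Fetch each URL in turn, pausing between requests.

    Returns a dict mapping each successfully fetched URL to its raw content.
    """
    pages = {}
    for i, url in enumerate(urls):
        response = get(url, headers=headers)
        if response.status_code != 200:
            # Assumption: any non-200 status means we were blocked or ran
            # past the last page, so we stop instead of retrying.
            print(f'Stopping at {url}: status {response.status_code}')
            break
        pages[url] = response.content
        if i < len(urls) - 1:
            # Random pause so requests are not sent at a fixed, rapid rate.
            time.sleep(random.uniform(min_delay, max_delay))
    return pages
```

With the objects defined earlier, a call could look like fetch_pages([website], requests.get, headers).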

Soup Object

We have a good response from the website. Next, we define the Beautiful Soup object that will help us read the HTML. That’s what Beautiful Soup does: it takes the text of the response and parses it so that the structure is easy to navigate and the contents are easy to get. html.parser is the parser from Python's standard library, which we use here to parse the HTML.

soup = BeautifulSoup(response.content, 'html.parser')
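To see what the soup object offers without touching the network, here is a small sketch that parses a hand-written HTML snippet. The snippet, the 'card' class, and the variable names are made up for illustration and only stand in for response.content.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for response.content, so the example runs offline.
html = b"""
<html><head><title>Homes in New York</title></head>
<body><ul>
  <li class="card"><div data-testid="property-price">$500,000</div></li>
  <li class="card"><div data-testid="property-price">$750,000</div></li>
</ul></body></html>
"""

soup = BeautifulSoup(html, 'html.parser')

# Navigate by tag name: the text inside <title>.
page_title = soup.title.get_text()

# find_all returns every matching element as a list-like result.
cards = soup.find_all('li', {'class': 'card'})

# find returns only the first match inside the given element.
first_price = cards[0].find('div', {'data-testid': 'property-price'}).get_text()
```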

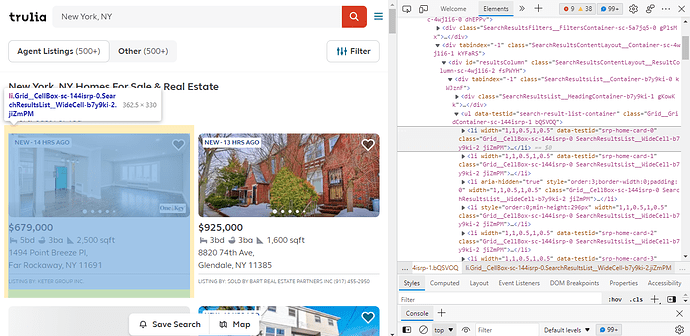

With the soup object ready, we need to find the right elements using the browser's inspect tool. Right-click the page and choose Inspect, then look at the element for each listed property. Each property has a class attribute with the value “Grid__CellBox-sc-144isrp-0 SearchResultsList__WideCell-b7y9ki-2 jiZmPM”.

Inspect the element of each property

We can get all of the data using code like this.

results = soup.find_all('li', {'class': 'Grid__CellBox-sc-144isrp-0 SearchResultsList__WideCell-b7y9ki-2 jiZmPM'})

Get the Attribute

After that, we extract the fields we need: the address, number of beds, number of bathrooms, and price. Once again, inspect the page to find the element that holds each value. Then we collect each field into its own list.

address = [result.find('div', {'data-testid':'property-address'}).get_text() for result in results]

beds = [result.find('div', {'data-testid':'property-beds'}).get_text() for result in results]

baths = [result.find('div', {'data-testid':'property-baths'}).get_text() for result in results]

prices = [result.find('div', {'data-testid':'property-price'}).get_text() for result in results]
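One thing to watch: find returns None when a cell has no matching element, and calling get_text() on None raises an AttributeError. The sketch below shows one possible way to guard against that. The helper name text_or_none and the sample HTML (including the idea that some cells are ads without listing data) are assumptions for illustration.

```python
from bs4 import BeautifulSoup

def text_or_none(result, testid):
    """Return the stripped text of the div with this data-testid, or None if absent."""
    tag = result.find('div', {'data-testid': testid})
    return tag.get_text(strip=True) if tag is not None else None

# Hypothetical sample: one real listing and one cell without listing data.
sample = """
<ul>
  <li><div data-testid="property-address">1 Main St, New York, NY</div>
      <div data-testid="property-price">$500,000</div></li>
  <li><div class="ad">Sponsored</div></li>
</ul>
"""

cells = BeautifulSoup(sample, 'html.parser').find_all('li')
addresses = [text_or_none(cell, 'property-address') for cell in cells]
cell_prices = [text_or_none(cell, 'property-price') for cell in cells]
```

Keeping None for missing values keeps all lists the same length, so the rows still line up when they are combined later.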

Make a DataFrame

Then we can turn the lists we have into a DataFrame. With the pandas module this is easy.

# Build the DataFrame directly from the lists (DataFrame.append was removed in pandas 2.0)

real_estate = pd.DataFrame({'Address': address, 'Beds': beds, 'Baths': baths, 'Price': prices})
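The values in the DataFrame are still text, so they need to be converted to numbers before any price analysis. Here is a rough sketch of two converters. It assumes prices are written with full digits (for example '$1,250,000') and bed or bath counts start with a number (for example '3bd'). Those formats and the function names are my assumptions, so inspect your own data first.

```python
import re

def parse_price(text):
    """Convert a price string such as '$1,250,000' to an int; None if it has no digits."""
    if text is None:
        return None
    # Keep only the digits. Assumption: no abbreviated forms such as '$1.2M'.
    digits = re.sub(r'[^\d]', '', text)
    return int(digits) if digits else None

def parse_count(text):
    """Return the first integer found in strings such as '3bd' or '2ba', else None."""
    if text is None:
        return None
    match = re.search(r'\d+', text)
    return int(match.group()) if match else None
```

These could be applied column by column, for example with real_estate['Price'].map(parse_price).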

Save to Excel or csv File

If you want to save the DataFrame to an Excel or CSV file, you can write your code like this.

# to Excel (pandas needs an Excel engine such as openpyxl installed)

real_estate.to_excel('real estate.xlsx')

# to csv

real_estate.to_csv('real estate.csv')
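By default, both methods also write the DataFrame index as an extra first column. If you do not want that, pass index=False. The sketch below shows this with a tiny made-up DataFrame written to an in-memory buffer instead of a file. The sample rows are illustrative only.

```python
import io

import pandas as pd

# Made-up rows standing in for the scraped data.
sample_estate = pd.DataFrame({
    'Address': ['1 Main St', '2 Park Ave'],
    'Beds': ['2bd', '3bd'],
    'Baths': ['1ba', '2ba'],
    'Price': ['$500,000', '$750,000'],
})

# Write to an in-memory text buffer; a file path works the same way.
buffer = io.StringIO()
sample_estate.to_csv(buffer, index=False)  # index=False drops the row-number column
csv_text = buffer.getvalue()

# Read it back to confirm the round trip keeps two rows and four columns.
round_trip = pd.read_csv(io.StringIO(csv_text))
```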

That’s all for this article. Scraping data with BeautifulSoup is straightforward and can be used in many ways, not only for choosing the right real estate. The whole code that I made can be seen on my GitHub.